In my travels as a management consultant focusing on testing and quality in the enterprise, I see a lot of well-intended “symptom treating” in agile/CI/CD/DevOps transitions. Recently I’ve been advising one of the biggest mergers in the industry on combining their testing operations and how to “transform” into a leaner model. I haven’t blogged in a while, but people have been asking me about some of the workshops I’ve been taking them through, with a particular interest in metrics (as usual), so I figured this was as good a topic as any to start writing again.

I spent a couple of days with them pulling apart their old metrics scorecard. Given my well-documented skepticism about trying to “measure quality”, you could reasonably call it confirmation bias, but the team itself observed some interesting problems that were driving bad decisions. To start, I took the team through their metrics scorecard using the lens I use for viewing enterprise quality programs, which could be summed up in this question: “Who needs to know what and when to make strategic decisions?” Period. If a metric doesn’t support answering that question then, in my experience, there is very little, if any, point in collecting it.

Further to that, I believe that quality is a multi-dimensional attribute that is highly biased and impossible to quantify. Despite all our attempts, I also find it pointless to report software quality in linear or binary statistics, in part because it’s heavily reliant on anecdotal evidence. That’s because software systems act like biological networks composed of complex problems involving too many unknowns and relationships to reduce to rules and processes. It is my experience that software quality can only be managed, not solved, and that over-testing a system builds in fragility.

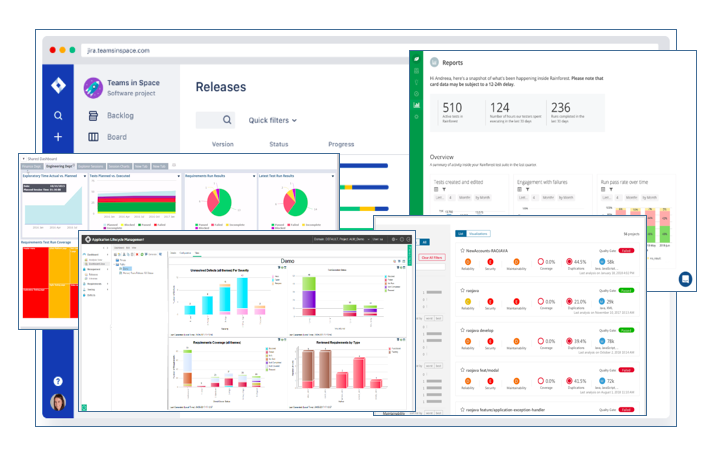

Exhibit A: Really crappy metrics reports…

Despite that, I have yet to see a test management tool or metrics program that isn’t highly misleading if used as designed, which means they are probably disregarded by anyone making a decision other than how many testers to fire. That’s because even today, with all the advances in technology, test tools and development practices, software quality’s primary measure in enterprise tech is still “#passed tests = quality”. This pervasive obsession with counting tests drives massive amounts of dysfunction in teams, leading to bad decisions on automation and to technical solutions being proposed for cultural problems. Mostly, though, it provides a false sense of security that we know what our testing is actually doing.

Needless to say, the program I’m reviewing right now was focused on those exact things instead of risks. The goal of testing, which is to provide information to people who need to make decisions for their business, had been displaced by distractions. As I took the team through what decisions had been made based on the current reporting (release decisions, value realization, and resource allocation across people, process and technology), they realized that there were validity problems with ~90% of their metrics.

We’re currently completing an exercise to model quality within their context, but they now realize it’s a difficult problem to manage, not solve. When it comes to reporting on quality and testing, our industry can do a LOT better, and in my opinion success lies somewhere in the nexus of machine learning, visual test models, model-based testing and monitoring. For now, when it comes to reporting on enterprise software quality, a good deal of “I don’t know” would go a long way towards solving the problem. Ultimately, though, we need a way to “see” the problem differently, through lenses that more accurately reflect the difficult nature of reporting on quality.
